If you do a text search for statice in Flora Graeca v3 on archive.org, which is at

https://archive.org/download/floragraecasive3sibt/floragraecasive3sibt.pdf

then the search is incredibly slow, with the progress bar crawling from page to page. Something like Foxit finds the text immediately.

I suppose SumatraPDF is unpacking the images while it does the text search. I would guess they are in JP2 format, which is very slow to unpack, and unpacking them is quite unnecessary for a text search. Foxit presumably isn’t doing that.
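One rough way to check the JP2 guess without opening the file in a viewer is to count the image filter names in the raw PDF bytes. This is only a sketch: the filter names (`JPXDecode` for JPEG 2000, `DCTDecode` for JPEG, and so on) come from the PDF specification, but the function name `count_image_filters` and the sample bytes are made up for illustration, and the count is approximate since it just pattern-matches the file.

```python
import re
from collections import Counter

# PDF stream filter names for common image encodings (per the PDF spec).
FILTERS = {
    b"JPXDecode": "JPEG 2000 (JP2)",
    b"DCTDecode": "JPEG",
    b"JBIG2Decode": "JBIG2",
    b"CCITTFaxDecode": "CCITT fax",
}

FILTER_RE = re.compile(rb"/(JPXDecode|DCTDecode|JBIG2Decode|CCITTFaxDecode)\b")


def count_image_filters(pdf_bytes: bytes) -> Counter:
    """Count how often each image filter name appears in the raw bytes.

    Hypothetical helper: a crude scan, not a real PDF parser.
    """
    counts = Counter()
    for name in FILTER_RE.findall(pdf_bytes):
        counts[FILTERS[name]] += 1
    return counts


# Made-up fragment standing in for two image dictionaries.
sample = (
    b"<< /Type /XObject /Subtype /Image /Filter /JPXDecode >> "
    b"<< /Type /XObject /Subtype /Image /Filter [/DCTDecode] >>"
)
print(count_image_filters(sample))
```

For a real file, read it with `open(path, "rb").read()` and pass the bytes in. A large `JPEG 2000 (JP2)` count would support the idea that slow image decoding is involved, though it says nothing about what the search code actually does.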

Presuming that is the case, I would recommend that the text search works on the text alone, without unpacking any images. If the cause is something else, then I presume the answer is to do whatever Foxit must be doing right…

Cheers! David


Using the latest pre-release, it finds it twice on page 89 before the stupidly compressed, quadruple-size first page has rendered. One reason to never use compression beyond the basic need to reduce simple duplication.

The pages should have been scanned at half scale (25% of the volume). The exceptionally high resolution simply borks the OCR, which prefers 200-300 dpi for efficiency; after OCR the image can be dropped to 72-100 dpi.
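The arithmetic behind "half scale is 25% of the volume" can be sketched as below. The function names are hypothetical, and the 600 dpi source figure is only an assumed example, since the actual scan resolution is not stated here.

```python
def pixel_fraction(scale: float) -> float:
    """Fraction of the original pixel count left after scaling both axes."""
    return scale * scale


def scale_for_dpi(source_dpi: float, target_dpi: float) -> float:
    """Linear scale factor needed to go from source_dpi to target_dpi."""
    return target_dpi / source_dpi


# Half scale on each axis leaves a quarter of the pixels.
print(pixel_fraction(0.5))

# Assumed example: a 600 dpi scan brought down to 300 dpi for OCR.
ocr_scale = scale_for_dpi(600, 300)
print(ocr_scale, pixel_fraction(ocr_scale))
```

The point is simply that pixel count falls with the square of the linear scale, so modest reductions in dpi give large reductions in the data that has to be decoded.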

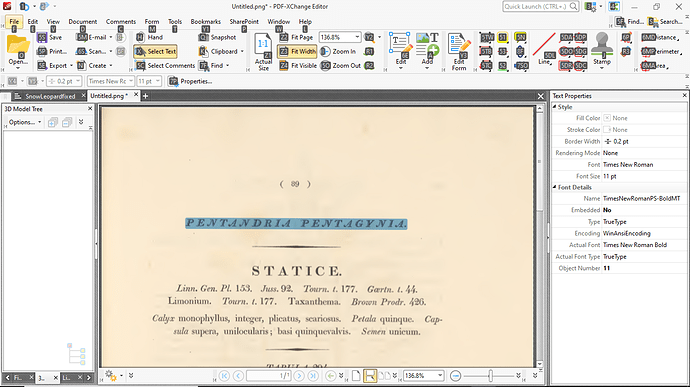

Pentandria Pentagynia comes out as PEJVTAJVBRIA P E JVT Jl GY JVIA. [DUH!]

IMHO it’s a waste of one robot’s time to set it up, and then potentially of thousands of human lives to decompress. (Bad Robot)

Unfortunately all the archive.org PDFs seem to suffer from this sluggish decompression (they are produced by those large-scale pre-copyright scanning efforts). I get the DJVU where available, but often there’s only a PDF. The actual OCR is variable, regularly quite good, but the rendering is a killer, and I usually have to get IrfanView to convert the PDF to a folder of JPGs, or if wished make a PDF of those JPGs, but that loses the OCR. It would be ideal if there was some program that could run through and recompress the JP2s within the PDF as JPGs with proper compression control and without losing the OCR/text, but I’ve looked before and didn’t spot one. Either way, the Sumatra text search shouldn’t be impacted in the way it is, beyond perhaps rendering the first visible page… d

You might try the Xchange viewer/editor to repack the images, but I guess that triggers the demo stamps. It will usually also let you re-OCR, often with better results, though that would perhaps be better done on just some chapters or pages.

If you burst the PDF into pages to resize them (and re-OCR), then dropping the bad OCR is not an issue.

I would convert the pages to PNG or mono TIFF (both lossless) rather than JPG, at a scale where the smallest letters are just big enough for OCR to work. That scale is the difficult bit to determine for a large document.

I’m not so worried about the image quality, so long as it’s readable and I can find text or navigate to what I’m after. Knowing the page number, I can then go to the original if I need perfect quality (archive-org does also provide zips of the original JP2s, which you can pull individual pages from). It’s the navigation that’s the awful bit.

I’ll relook at Xchange - having a demo stamp is a pain though, as sometimes I want to share the item with someone else and don’t want them to be ‘challenged’ by sluggishness.

Cheers, David

Searching was improved recently in the pre-release compared with 3.2, so test the latest SumatraPDF portable from this site.

Will do - thanks!

Funnily my original post didn’t object to archive-org being mentioned but my subcomment was blocked for it (hence the dash in place of the dot).

d

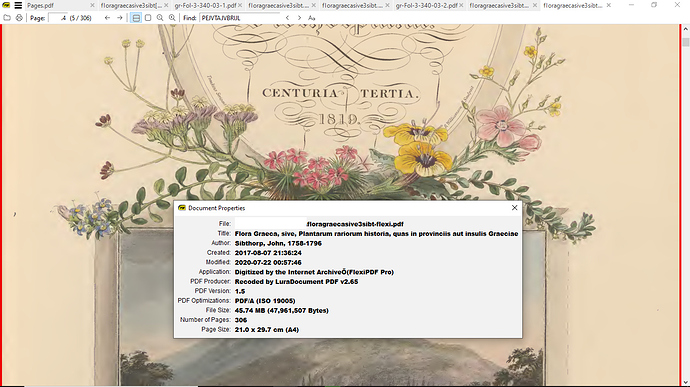

Not sure if you are aware, but there is an alternative source for those volumes.

I now see the large page size is to be expected, but these are scanned at 1/13th the density and thus suffer some similar OCR issues. They render much faster, though, as they are not heavily compressed.

An added bonus: the volume 3 I looked at has bookmarks.

Since there are about half as many pages, I now see the volumes are divided into parts, Primus and Secundus?

http://tudigit.ulb.tu-darmstadt.de/show/gr-Fol-3-340

Page numbering entries are all over the place! How do you work with part one including up to page 46 of part 2?!

Tried a few approaches and, for my preference, resized all pages to A4 using FlexiPDF. It is a compromise, as the pages are smaller than the source, but the effect on rendering time is much better whilst reasonable quality is maintained and the file size does not bloat too much. Searching is fast enough since it’s not impacted so much by the rendering.
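For anyone wanting to estimate how much a resize to A4 shrinks a page, here is a minimal sketch of the geometry only. It is not how FlexiPDF does it; the function name `fit_scale` and the oversized page dimensions are assumptions for illustration. A4 is 210 x 297 mm, which is about 595.276 x 841.890 PDF points.

```python
# A4 in PDF points (1 pt = 1/72 inch): 210 mm x 297 mm.
A4_WIDTH_PT = 595.276
A4_HEIGHT_PT = 841.890


def fit_scale(width_pt: float, height_pt: float,
              target_w: float = A4_WIDTH_PT,
              target_h: float = A4_HEIGHT_PT) -> float:
    """Uniform scale that fits a page inside the target, keeping aspect ratio."""
    return min(target_w / width_pt, target_h / height_pt)


# Assumed example: a page exactly twice A4 in each direction.
big_w, big_h = A4_WIDTH_PT * 2, A4_HEIGHT_PT * 2
scale = fit_scale(big_w, big_h)
print(scale)          # linear scale factor
print(scale * scale)  # fraction of the original page area
```

Taking the smaller of the two ratios keeps the whole page inside A4 without distortion, at the cost of a margin on one axis when the aspect ratios differ.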